The 3V (volume, variety, velocity) story, extended with veracity and value:

Data warehouses maintain data loaded from operational databases using Extract, Transform, Load (ETL) tools such as Informatica, DataStage, Teradata ETL utilities, etc.

Data is extracted from the operational store (which holds daily, tactical operational information) at regular intervals defined by load cycles. A delta (incremental) load or a full load is taken into the data warehouse, which contains fact and dimension tables modeled on a STAR schema (denormalized dimensions) or a SNOWFLAKE schema (normalized dimensions, closer to 3NF).
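
The difference between a delta load and a full load can be sketched in a few lines of Python. This is only an illustration, not how any particular ETL tool works: the `updated_at` audit column, the `order_id` key and the sample rows are all assumptions made up for the example.

```python
from datetime import datetime

def extract_delta(source_rows, last_load_time):
    # Delta (incremental) extract: only rows changed since the previous load cycle.
    return [row for row in source_rows if row["updated_at"] > last_load_time]

def extract_full(source_rows):
    # Full extract: every row, regardless of when it changed.
    return list(source_rows)

def load(warehouse, rows):
    # Upsert by key so that re-running a load cycle does not duplicate rows.
    for row in rows:
        warehouse[row["order_id"]] = row
    return warehouse

# Hypothetical operational rows; "updated_at" is an assumed audit column.
source_rows = [
    {"order_id": 1, "amount": 100, "updated_at": datetime(2013, 6, 1)},
    {"order_id": 2, "amount": 250, "updated_at": datetime(2013, 6, 17)},
    {"order_id": 3, "amount": 75, "updated_at": datetime(2013, 6, 18)},
]

# The warehouse already holds order 1 from an earlier cycle.
warehouse = {1: source_rows[0]}
last_load_time = datetime(2013, 6, 10)

delta = extract_delta(source_rows, last_load_time)
warehouse = load(warehouse, delta)
print([row["order_id"] for row in delta], sorted(warehouse))
```

A full load would simply pass `extract_full(source_rows)` to `load` instead; it is simpler but moves every row on every cycle.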

During business analysis we learn the granularity at which we need to maintain data. For example, (country, product, month) may be one granularity, while (state, product group, day) may be the requirement for a different client. The level at which we need to analyse the business depends on its key drivers.
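
To make the idea of granularity concrete, here is a small Python sketch that rolls the same detail rows up to the two grains mentioned above. The column names and sample figures are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

def roll_up(rows, grain):
    # Sum the "sales" measure for each distinct combination of the grain columns.
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[column] for column in grain)
        totals[key] += row["sales"]
    return dict(totals)

# Hypothetical detail rows kept at the lowest level (one row per sale).
sales = [
    {"country": "IN", "state": "KA", "product": "router", "product_group": "network",
     "month": "2013-06", "day": "2013-06-17", "sales": 10.0},
    {"country": "IN", "state": "KA", "product": "switch", "product_group": "network",
     "month": "2013-06", "day": "2013-06-17", "sales": 5.0},
    {"country": "IN", "state": "MH", "product": "router", "product_group": "network",
     "month": "2013-06", "day": "2013-06-18", "sales": 7.0},
]

by_country_product_month = roll_up(sales, ("country", "product", "month"))
by_state_group_day = roll_up(sales, ("state", "product_group", "day"))
print(by_country_product_month)
print(by_state_group_day)
```

Both summaries can be derived from the detail rows, but the detail rows cannot be recovered from either summary, which is why the chosen grain matters.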

There are many databases built specially for data warehouse requirements: low-level indexing, bitmap indexes, and highly parallel loads using multiple partition clauses for SELECT (during analysis) and INSERT (during load). Data warehouses are optimized for those requirements.

For analytics we require data at the lowest level of granularity. In normal data warehouses, however, it is maintained at the level of granularity desired by business requirements, as discussed above.

For data characterized by the 3Vs of the cloud (volume, velocity and variety), traditional data warehouses are not able to accommodate high volumes of, say, video traffic or social networking data. An RDBMS engine can load only a limited amount of data for analysis. Even when it does, the large number of mechanisms such as triggers, constraints and relations, along with the many processes running in the background, make it slow. Sometimes formalizing the data into a strict table format is also difficult; that is when data is dumped as a BLOB into a column of a table. But all this slows down data reads and writes, even if the data is partitioned.

Since the advent of the Hadoop Distributed File System (HDFS), data can be inserted into files and maintained across Hadoop clusters that scale out and work in parallel, with execution controlled by the MapReduce algorithm. Hence cloud, file-based distributed cluster databases that grew out of social networking needs, like Cassandra used by Facebook, have mushroomed. The Apache Hadoop ecosystem has also created Hive (a data warehouse).

http://sandyclassic.wordpress.com/2011/11/22/bigtable-of-google-or-dynamo-of-amazon-or-both-using-cassandra/
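
The map and reduce steps mentioned above can be sketched with the classic word count. Note that this is a single-process simulation in plain Python, not the Hadoop API; on a real cluster the map and reduce calls would run in parallel on different nodes, and the function names here are just illustrative.

```python
from collections import defaultdict

def map_phase(line):
    # Map: emit a (key, 1) pair for every word in one input record.
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    # Shuffle: group all emitted values by key, as the framework would between phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    # Reduce: collapse the values of one key into a single result.
    return key, sum(values)

def map_reduce(lines):
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())

counts = map_reduce(["big data big clusters", "data in files"])
print(counts)
```

Because each map call looks at one record and each reduce call looks at one key, the work can be split across as many machines as there are records and keys to share out.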

With the Apache Mahout analytic engine on Hadoop, analysis of real-time data with high 3V is made possible. The ecosystem has come full circle: Pig, a data flow language; ZooKeeper, for coordination services; and Hama, for massive scientific computation.

HIPI, the Hadoop Image Processing Interface library, made large-scale image processing on Hadoop clusters possible.

http://hipi.cs.virginia.edu/

Real-time data is where all data of the future is heading. It is gaining traction as large server data logs need to be analysed, which led Cisco to acquire Truviso for real-time data analytics: http://www.cisco.com/web/about/ac49/ac0/ac1/ac259/truviso.html

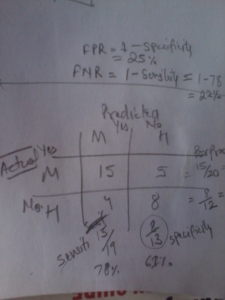

Analytics is where the action is; see this example:

http://sandyclassic.wordpress.com/2013/06/18/gini-coefficient-of-economics-and-roc-curve-machine-learning/

With innovation in the Hadoop ecosystem spanning every direction, changes have even started happening on the other side of the cloud stack, with VMware acquiring Nicira. With petabytes of data being generated, there is no way forward but to massively parallelize data processing using MapReduce algorithms.

There is a huge amount of data yet to be generated, with IPv6 making it possible for a vast array of devices to have unique IP addresses. Add to that machine-to-machine (M2M) interaction logs and the huge growth in video and image data from the vast array of cameras in every nook and corner of the world. Data of such epic proportions cannot be loaded and kept in an RDBMS engine, whether it is structured or unstructured. Only analytics can be used to predict behavior, or agent-oriented computing that directs you towards your target search. Big data technologies like Apache Hadoop, Hive, HBase, Mahout, Pig, Cassandra, etc., as discussed above, will make a huge difference.

Some of the technologies remain, to some extent, vendor-locked and proprietary, but Hadoop is completely open, which has led to its use across multiple projects. Nearly every data analysis product now has support for Hadoop. New libraries are added almost every day. Map and reduce cycles are turning product architecture upside down. The 3Vs (variety, volume, velocity) of data are increasing each day: a new variety comes up, a new speed or velocity level is broken, and volume records are broken.

The intuitive interfaces used to analyse data in business intelligence systems are changing to adjust to such dynamism. Since we cannot look at every bit of data, nor even at every bit of changing data, we need our attention directed to the more critical bits of data out of the heap of petabytes generated by a huge array of devices, sensors and social media. What directs us to the critical bit? As in the example given:

http://sandyclassic.wordpress.com/2013/06/18/gini-coefficient-of-economics-and-roc-curve-machine-learning/

For hedge funds, use the Hedgehog language provided by:

http://www.palantir.com/library/

Such processing can be achieved using Hadoop or the MapReduce algorithm. There is a plethora of tools and technologies that make the development process fast. New companies are emerging from the ecosystem, developing tools and IDEs to make the transition to this new style of development easy and fast.

When a market gets commoditized as it hits the plateau of marginal gains from first-mover advantage, the ability to execute becomes critical. What big data changes is cross-analysis, a kind of first-mover validation before actually moving. Here speed of execution will become more critical. As a production function, innovation gives returns in multiples. So the rule of the market is differentiate or die: analyse, execute on feedback quickly, and move faster…

This will make cloud computing development tools faster to develop, with crowdsourcing, big data and social analytics feedback.